Here’s the good news: we, those who promoted open web standards, have won! The web of today uses less and less closed technology and fewer plugins, and HTML, CSS and JavaScript are the tools used to create a lot of great web experiences.

For example, hardly anybody uses Flash for a simple image gallery any more, and more and more companies advertise themselves to us and to the world as “supporting web standards” and “using open technology”.

Of course, there is a lot of lip service going on and – as Bruce Lawson put it – we get HTML5, hollow demos and forgetting the basics. Bruce points out that a lot of HTML5 demos don’t have any semantic markup, don’t even create working links and have a lot of traits we’ve seen in Flash tunnels in the nineties and at the beginning of the millennium.

On the other side of this argument, a few people keep telling me they are working on blog posts on “why semantic markup and JavaScript fallbacks are not important any longer”.

I think there is a happy middle ground to be found, and it mostly means that we need to understand the following: what we do as web developers depends very much on the medium our work is consumed in.

How we use the internet has changed over the years and if we don’t want to be seen as enemies of progress we need to alter our best practices and give them a bit more flexibility when it comes to applying them.

Much like a web site looking and working the same in every browser means catering to the lowest common denominator and not using our platforms smartly, having a “one stack of technologies used in a certain fashion” limits us in reaching people who are just starting out on the web and simply want to get some work done.

Are our best practices really rooted in reality?

Altering best practices? How is that possible? Well, for starters I think a lot of what we preach is cargo cult rather than based on real happenings. A lot of the things we tell people “are absolutely necessary to make something work on the web” are not needed and many an excited explanation of the usefulness of semantic markup actually is not based on facts. A lot of what we do as best practices is done for us, not for the end users or the technology we use. But more on that later.

Looking back (not in anger)

How did we get to where we are now, where newly built showcase sites violate the simplest concepts like providing alternative text for an image or using structured HTML rather than a few empty DIVs?

In order to learn how we got into this perceived mess it is important to understand what we did in the past. A lot of talks and books and posts paint a picture of the brave new world of web standards brazenly cutting a path through the jungle of closed technology towards a bright future. This is far from what really happened. If we are honest, a lot of what we did was hacking around to make things work and then trying to find a way to make what we did sustainable. And that last step is what brought us semantics. When I hear praise of POSH (plain old semantic HTML) as the way we built things in the past, a skill we supposedly forgot over time, I have to snigger. We did not do anything of the sort – at least not in production.

Humble beginnings – HTML and CGI

In the beginning there were no plugins and there was no JavaScript. We had HTML and images, and the biggest mistake people already made was showing text as images without any alternative text. This meant that users of text-only browsers (which were still in use) and those on slow connections got the short end of the stick. Interaction was defined as clicking on links and submitting forms.

What we already started to try was to speed things up by using frames. For example we kept a “sticky navigation” and only loaded content pages without any menus. This was the start of breaking basic browser functionality like bookmarking for the sake of performance. I remember working around that using cookies to store the state of the page and re-write the frameset accordingly on subsequent visits. That only fixed it for the current user – sending a link out to others was not possible any more. But the pages loaded much faster.

Layout was achieved in HTML - with horizontal lines, PRE elements, lots of &nbsp; and tables. It was most important to make the thing look right across all the browsers; what the HTML really meant did not matter.

What we tell people instead though is that these were simpler times where the HTML and its semantic value really mattered. I remember it differently.

DHTML days (1)

When JavaScript got supported we started to go properly nuts. Whole menus were written out with document.write() and we used popup windows with frames written dynamically inside them (for example for image galleries).

We even started checking which browser was in use and – in the more sensible cases – rendered different experiences. In the less thought-out solutions we just told people that “This site needs Internet Explorer 4 to work”.

We also started hiding and showing content with JavaScript. Sometimes we wrote it out with JS and gave text browsers (or people in companies where a proxy turned off JS by default) either no content at all or far too much to take in, without caring much about structure.

When SEO started to matter we also used the NOSCRIPT tag to provide fallback text and links – most of the time laden with keywords instead of meaning.

DHTML days (2)

When CSS got supported things really took off – we could not only create dynamic things and show and hide them but really go to town moving, rotating, animating and stacking them. And that we did. DHTML library sites had hundreds of effect menus and image sliders and rotating buttons and whatnot.

Most of the behaviour was done with JavaScript but we also started to play with CSS and the :hover pseudo-class to build “CSS only multi level dropdown menus” and other things that couldn’t be used without a mouse.

This was the heyday of DHTML and the first line of almost any script was checking for IE with document.all or Netscape with document.layers. The speed of computers also forced us to go through all kinds of dangerous hacks and tricks (dangerous as they made maintenance very hard, since the hacks tended not to get documented) to make things look smooth.
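To give an idea of what such a first line looked like, here is a minimal sketch of that kind of object detection. The function name detectDhtmlApi and the labels it returns are made up for illustration and do not come from any real library; the only real APIs involved are document.all, document.layers and document.getElementById.

```javascript
// Sketch of late-1990s "object detection". The function name and the
// returned labels are illustrative, not taken from any real library.
function detectDhtmlApi(doc) {
  // Check for the W3C DOM first so that standards-capable browsers
  // never end up in one of the legacy branches.
  if (doc && typeof doc.getElementById === "function") {
    return "dom";
  }
  // Internet Explorer 4 exposed every element through document.all.
  if (doc && doc.all) {
    return "ie4";
  }
  // Netscape 4 exposed positioned elements through document.layers.
  if (doc && doc.layers) {
    return "netscape4";
  }
  return "none";
}

// Usage in a page: pass the global document when there is one.
const api = detectDhtmlApi(typeof document !== "undefined" ? document : null);
```

Every effect then needed one code path per label, which is a large part of why these scripts were so hard to maintain.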

The gospel (according to Zeldman)

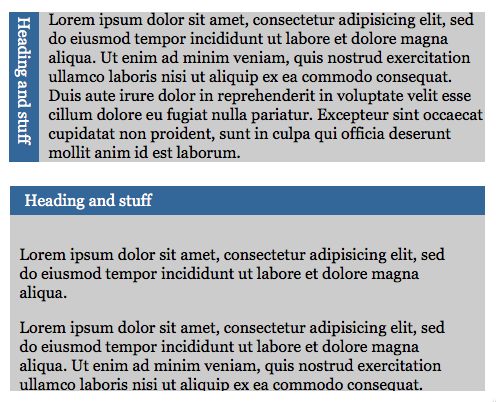

When the book of Zeldman came out this was the message: Let’s stop trying to fix things for browsers and stop jumping through hoops to make our products work in an environment that is not to be trusted, and rely on the standards instead. The main tool for this message was the separation of technologies:

HTML is the structure, CSS is for the visual look and feel and JavaScript is for behaviour. If we keep all of these things separated, then we have a good web product that is easy to maintain, works for everyone and is clean to extend and work with.

That was the idea and we took it further by coining the term Unobtrusive JavaScript (I remember having lots of fun writing this course) and subsequently DOM Scripting (with the DOM Scripting task force of the WaSP driving a lot and Jeremy Keith’s and my book giving good examples of how to use it).
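As a rough sketch of what “unobtrusive” means in practice: the HTML contains plain links that work on their own, and the script only takes over once it knows it can. The names enhanceGalleryLinks, showImage and the “gallery” class are hypothetical and only there for illustration, and the sketch uses today’s DOM methods rather than what was available at the time.

```javascript
// Sketch of "unobtrusive" enhancement. enhanceGalleryLinks, the "gallery"
// class and showImage are hypothetical names used for illustration only.
function enhanceGalleryLinks(root, showImage) {
  // Without the DOM methods we need, do nothing: the plain links keep working.
  if (!root || typeof root.querySelectorAll !== "function") {
    return 0;
  }
  const links = root.querySelectorAll("a.gallery");
  for (const link of links) {
    link.addEventListener("click", (event) => {
      // Only at this point do we replace the browser's default navigation.
      event.preventDefault();
      showImage(link.getAttribute("href"));
    });
  }
  // Report how many links were enhanced.
  return links.length;
}
```

In a page this would be called as enhanceGalleryLinks(document, someFunctionThatShowsTheImage). If the script never loads, the links still lead to the images.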

The state today

Nowadays it seems we have gone full circle back to the world of mixing and matching the concerns and layers of development.

With great power comes great responsibility and right now I get the feeling that the latter is very low on our radars as it is far too much fun playing with the cool new things we have at our disposal. We have mobile phones with incredibly fast processors, we have supersonic JavaScript engines capable of 3D animation and hardware accelerated CSS animations. This makes it hard to get excited about semantic values.

Almost all the technologies in the stack get washed out in this new web technology world and separation becomes much harder. What good is a CANVAS without any scripting? Should we animate in CSS or in JavaScript? Is animation behaviour or presentation? Should elements needed solely for visual effects be in the HTML or generated with JavaScript or with :after and :before in CSS?

We do a lot more on the client these days than we did in the past. It is time we give the client more credit in our best practices. Yes, old browsers are not likely to go away anytime soon (and this is sometimes on purpose, with for example Microsoft not offering IE upgrades to Windows XP – and soon Vista – users).

Separation of concerns vs. the image of web developers

In its meaning and approach the separation first explained by Zeldman is still an incredibly good idea – the thinking behind the separation of the different technologies is great. Some companies very much embraced the concept in their training and for example Yahoo even goes a step further in making it understandable by calling it “separation of concerns” and not layers of development.

This subtle difference also shows partly why this great idea was not always implemented in real life products: you need to have an understanding of how the different technologies work and how to write them properly. In essence, you want to have a team of people with different areas of expertise working together to build a kick-ass product.

In reality, though, web development is still seen as something any developer with a bit of training could do. Or, when you hire a dedicated web development team, its members are considered to be experts across the board and are not allowed to concentrate on semantics, CSS or JavaScript.

This is the main reason why final web products out there do not have clear separation. In most cases, the developers are aware that they could have done a better job but they got forced to rush it or work with a technology they did not care much for. If you ever had to debug and optimise CSS written by Java developers you’ll know what I mean.

Web standards showcases and attrition

Whenever we praised a new product coming out as using web standards the right way it was not a big product. It was almost never the result of an enterprise framework or CMS. And – in a lot of cases – it was actually built to make a point about using web standards and not streamline the process of building a web product.

Take the site that most likely was the main cause for the breakthrough of CSS in the view of the community: CSS Zen Garden. The garden was a simple XHTML document, semantically correct but already with a lot of IDs and classes as handles to apply CSS rules to. Its job was to show that by separating look and feel you can redesign a web site easily and make it look (and later on react to the user) totally different from one case to another.

This went incredibly well, until we got too excited about the possibilities we had with image replacement. Later submissions to the garden had large parts of text in background images, which was ironic as the original argument was that all the content should be in HTML.

In the real world, however, we never had a fixed HTML document to play with – we had CMSs to create web pages for us and everything was in flux. You can’t control the number of menu items, you can’t control the amount of text, and you will not be able to “simply add a class to an element” to give it some extra functionality. It is time we understood that we can inspire with showcase sites and presentations but we really don’t help the people developing web sites and fighting the concept of “everyone can do frontend, it is not hard code”.

Nobody wants to hear about the depth and composition of the sea when what we do is riding jet skis

Right now “best practice web development” talks, presentations and tutorials are incredibly self-referential. We speak to the same people about the same subjects and claim that people use semantics whilst out there the web is in a struggle to survive against walled garden development and native mobile development for a very small part of the world-wide market.

People happily say they “only build for WebKit” as this is “the best and fastest and most stable browser”. People are OK with seeing a showcase site completely and utterly fail when you don’t have the right browser and the right OS.

We start to recede into our respective specialist areas and speak at specialist conferences. A lot of what is taught at design conferences is the total opposite of what you hear at performance conferences. We build abstraction layer upon abstraction layer to work around browser issues and release dozens of “miracle” libraries and scripts at conferences without even caring if anyone will ever use them.

Speed is still the main thing that we talk about. How to shave 20 milliseconds off a script loader? How to make an animation run at 50fps instead of 30fps?

Best practices for a new market of developers

My favourite example was attending the Google I/O accessibility talk. For about an hour we got taught how to turn an element into a button and keep it accessible. Not once was it mentioned why we didn’t use a BUTTON element for the job. There was lots of great information in that talk – but none of it would have been needed, as we were simulating with JS and CSS something the browser readily gives us.
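To make the point concrete, here is a minimal sketch of the kind of work needed before a DIV behaves roughly like a button. This is not the code from that talk; makeFakeButton is a hypothetical helper, and real implementations handle more cases (disabled states, Space firing on key release and so on).

```javascript
// Sketch only: makeFakeButton is a hypothetical helper showing what a DIV
// needs before it behaves roughly like a native BUTTON element.
function makeFakeButton(el, onActivate) {
  el.setAttribute("role", "button"); // tell assistive technology what this is
  el.setAttribute("tabindex", "0");  // make it reachable with the keyboard
  el.addEventListener("click", onActivate);
  el.addEventListener("keydown", (event) => {
    // A native button can be activated with Enter and Space; a DIV cannot.
    if (event.key === "Enter" || event.key === " ") {
      event.preventDefault();        // stop Space from scrolling the page
      onActivate(event);
    }
  });
  return el;
}
```

With a BUTTON element none of this is necessary: it is focusable and can be operated with the keyboard out of the box, and all the script has to do is listen for the click event.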

The new generation of developers we have right now is very excited about technology. We, the educators and explainers of “best practices”, are tainted by years and years of being let down by browsers. jQuery and other environments propagated “write less, achieve more” as the main route to success. Most of what we tell people is “to add this and that to give things meaning”, and when they ask us “Why?” we have to come up with lies as, for example, not a single browser cares about the outline algorithm right now.

The only real benefit of using web standards

Using web standards means first and foremost one thing: delivering a clean, professional job. You don’t write clean markup for the browser, you don’t write it for the end users. You write it for the person who takes over the job from you. Much like you should use good grammar in a CV and not write it in crayon, you cannot expect to get respect from the people maintaining your code when you leave a mess that “works”.

And this is what we need to try to make new developers understand. It is about pride in delivering a clean job. Not about using the newest technology and chasing the shiny. For ourselves we have to understand that the only ones who really care about our beloved standards and separation of concerns are us – as we think about maintainability and not quick deployment and continuous iteration of code. The web is not code – the web is a medium where we use a mix of technologies fit for the purpose at hand to deliver a great experience for the end users.